We have begun implementing PerformancePoint Server 2007 (PPS) on top of our existing data marts. Right now we are in the early stages of requirements gathering. However, I have had a good chance to play with PPS, and it is very slick!

I would highly recommend that anyone needing a dashboard application download the evaluation version and spend some time on it. You can download it here.

You will see more posts about it in the very near future, because some of the things it can do are worth writing about. Some of the pitfalls we have hit are interesting as well!

peace

Thursday, December 18, 2008

Wednesday, December 10, 2008

DateTime Columns in Slowly Changing Dimension Component

We had an interesting error when implementing a slowly changing dimension in SSIS this week.

We had a date column that we were passing through from the source to the database. However, the source was a script component, because the data came from a stored procedure that returns multiple result sets. In other data flows, where we were using an OLE DB destination, we had typed datetime columns as DT_DATE. However, when we used DT_DATE with the Slowly Changing Dimension component, it threw an error:

Error at Import Data [Slowly Changing Dimension]: The input column "input column "ALDATE (15157)" cannot be mapped to external column "external column "ALDATE (15160)" because they have different data types. The Slowly Changing Dimension transformation does not allow mapping between column of different types except for DT_STR and DT_WSTR.

After much digging and research, we determined that we had to set the output column type on the script component to DT_DBTIMESTAMP, which is the SSIS type for a SQL Server datetime column. Once we changed that, the SCD worked just fine!

peace

Friday, December 5, 2008

Importing CSV Files with SSIS

One of the shortcomings of the comma-separated value (CSV) file import in SSIS is its inability to detect records that have too few fields.

According to Microsoft, SSIS only checks for the end-of-record indicator (usually a CR/LF) when it is processing the last column of a record. If it is not on the last column and it encounters a CR/LF, the CR/LF is ignored and the first field of the next line is appended to the last field of the previous line.

Here is an example of what you see:

Now, when troubleshooting this error, you can easily end up looking in the wrong place, since the error usually shows up only at the end of the file rather than at the row that is actually short.

SSIS is set up to accept 3 columns in a CSV file: Col1, Col2 and Col3.

The file comes in like this:

Col1,Col2

A1,A2

B1,B2

C1,C2

When SSIS imports this file, it produces this result:

Record 1: A1, A2B1, B2

Record 2: C1, C2 (Error: Column Delimiter Not Found)

So what I have been doing to gracefully catch and report on this is to quickly pre-process the file to count the columns. Since the processes that create the CSV files for my app are automated, I can assume that every record in the file has the same number of fields, so I only need to count one row. If you cannot assume this, it would be just as easy to loop through each row and determine the field count.
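If you cannot assume every record has the same layout, the check has to look at every row. Here is a minimal C# sketch (not the DLL mentioned below) that reads each line after the header and reports any row whose field count is off. The class and method names are hypothetical, it assumes the first line is a header, and like the VB script task it splits on plain commas, so it shares the quoted-comma limitation.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical helper: checks every data row of a CSV file instead of only
// the first one. Splits on plain commas, so quoted fields that contain
// commas will be miscounted.
public static class CsvFieldCountChecker
{
    // Returns one message per row whose field count is wrong; an empty list
    // means every row has the expected number of fields.
    public static List<string> FindBadRows(string path, int expectedFields, bool skipHeader = true)
    {
        var problems = new List<string>();
        int lineNumber = 0;

        foreach (string line in File.ReadLines(path))
        {
            lineNumber++;
            if (skipHeader && lineNumber == 1) continue; // header row
            if (line.Length == 0) continue;              // design choice: ignore blank lines

            int count = line.Split(',').Length;
            if (count != expectedFields)
            {
                problems.Add("Line " + lineNumber + ": " + expectedFields +
                             " expected, " + count + " received.");
            }
        }
        return problems;
    }
}
```

A script task using this would fail when the returned list is not empty and log each message, which points straight at the short rows instead of at the end of the file.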

I would also like to point out that my code does not yet handle commas embedded within quote-delimited fields. For example, if your record looked like this:

1,"this is um, the field", 1,1

a plain split on commas would count five fields instead of four. I will be posting code for that next week sometime.
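Until then, here is a minimal sketch of a quote-aware split in C#. It is an illustration, not the code promised above: it follows the common CSV convention (as in RFC 4180) that a doubled quote inside a quoted field stands for a literal quote, strips the surrounding quotes, keeps spaces as-is, and does not handle quoted fields that contain line breaks. The class name is hypothetical.

```csharp
using System.Collections.Generic;
using System.Text;

public static class QuoteAwareCsv
{
    // Splits one CSV record on commas, treating commas inside double quotes
    // as part of the field. A doubled quote ("") inside a quoted field is an
    // escaped literal quote. Surrounding quotes are removed from the result.
    public static List<string> SplitRecord(string line)
    {
        var fields = new List<string>();
        var current = new StringBuilder();
        bool inQuotes = false;

        for (int i = 0; i < line.Length; i++)
        {
            char c = line[i];
            if (c == '"')
            {
                if (inQuotes && i + 1 < line.Length && line[i + 1] == '"')
                {
                    current.Append('"'); // escaped quote inside a quoted field
                    i++;                 // skip the second quote of the pair
                }
                else
                {
                    inQuotes = !inQuotes; // opening or closing quote
                }
            }
            else if (c == ',' && !inQuotes)
            {
                fields.Add(current.ToString()); // field delimiter
                current.Clear();
            }
            else
            {
                current.Append(c);
            }
        }

        fields.Add(current.ToString()); // last field has no trailing comma
        return fields;
    }
}
```

Counting with SplitRecord(line).Count instead of Split(',').Length makes the record above come out as four fields.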

Ok, so here is what I am doing.

I set up a script task in the control flow, before I try to open the file with SSIS. This is the code I use:

    Imports System
    Imports System.Data
    Imports System.Math
    Imports Microsoft.SqlServer.Dts.Runtime
    Imports System.IO

    Public Class ScriptMain

        Public Sub Main()

            Const ExpectedFields As Integer = 25
            Dim Fields As String() = Nothing

            ' Using closes and disposes the reader on every exit path.
            Using sr As StreamReader = File.OpenText("C:\FileName.csv")

                If Not sr.EndOfStream Then
                    sr.ReadLine() ' Skip 1 row (header)
                End If

                If sr.EndOfStream Then
                    ' There were no records after the header. This might not be a failure in all implementations.
                    Dts.TaskResult = Dts.Results.Failure
                    Exit Sub
                End If

                ' Read the entire first data line and split it on commas into a string array.
                Fields = sr.ReadLine().Split(","c)

            End Using

            If Fields.Length <> ExpectedFields Then
                ' Log the error to SSIS and fail the task.
                Dim message As String = "Field Count is Invalid! " & ExpectedFields.ToString() & _
                    " expected, " & Fields.Length.ToString() & " received."
                Dts.Events.FireError(0, "Field Count Check", message, String.Empty, 0)
                Dts.TaskResult = Dts.Results.Failure
                Exit Sub
            End If

            Dts.TaskResult = Dts.Results.Success

        End Sub

    End Class

When this code runs, it generates an error if the field count is not correct.

If you need to check multiple file layouts, you can put the script task into a Foreach Loop container in SSIS and use a database lookup to get the expected field count based on the file name, making this code completely reusable!
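For the lookup, something has to map a file name to its expected field count. The sketch below uses an in-memory dictionary as a stand-in for that database table. Matching on a file-name prefix (so date-stamped names like Invoices_20081205.csv still match) and the names FileLayoutLookup and ExpectedFieldCount are my own assumptions, not part of the original package.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical stand-in for the database lookup table: maps a file-name
// prefix (e.g. "Invoices_") to the number of fields that layout should have.
public static class FileLayoutLookup
{
    // Returns the expected field count for the file, or null if no prefix matches.
    public static int? ExpectedFieldCount(string filePath, IDictionary<string, int> layouts)
    {
        string fileName = Path.GetFileName(filePath);
        string bestPrefix = null;

        foreach (string prefix in layouts.Keys)
        {
            // Case-insensitive match; the longest matching prefix wins, so
            // "Invoices_Detail_" beats "Invoices_" for a detail file.
            if (fileName.StartsWith(prefix, StringComparison.OrdinalIgnoreCase) &&
                (bestPrefix == null || prefix.Length > bestPrefix.Length))
            {
                bestPrefix = prefix;
            }
        }

        return bestPrefix == null ? (int?)null : layouts[bestPrefix];
    }
}
```

A null result is a good reason to fail the task too, since it means a file arrived whose layout nobody has registered.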

Also note... I actually converted this code into a C# dll and put it in the GAC on the SSIS server so all I have to do is call that dll in my script task. I will blog about that soon.

Hope this helps!

peace

Tuesday, December 2, 2008

Using GUID Data Type in SQL Command Task

I have now run into this problem several times, so I thought I would write it down.

A colleague just came to me with a SQL Command task that was not returning the data it should have.

Looking at his stored proc, everything seemed fine. The proc took in a UniqueIdentifier and returned a SELECT from a table.

The SQL Command task in SSIS passed a GUID and put the result set into an object variable.

However, by watching SQL Server Profiler, we saw that the value the SQL Command task sent to the stored procedure parameter was not the GUID that SSIS was processing.

So on the Parameter Mapping tab we changed the data type of the parameter from GUID to VARCHAR(70).

After that, SQL Server implicitly converted the string to a UniqueIdentifier, and the SQL Command returned the rows that were expected.

I hope this helps someone out there.

Monday, November 10, 2008

Timeouts in SSRS 2005 Web Service and Report Viewer Control

One of the questions that I get asked a lot is how to prevent timeouts when running or exporting large reports using the SSRS web service or the .NET report viewer control.

With the report viewer control, this is fairly simple; the catch is that the property is tucked away in the Properties window. There, expand the ServerReport property and set Timeout to a large number or to -1 (infinite timeout).

For the web service, the ReportExecutionService proxy class has a Timeout property, specified in milliseconds.

In my previous post showing how to use the web service, I neglected to add the timeout property.

    ReportExecutionService re = new ReportExecutionService();
    re.Credentials = System.Net.CredentialCache.DefaultCredentials;
    re.Timeout = -1; // milliseconds; -1 (System.Threading.Timeout.Infinite) means never time out
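Because the proxy's Timeout is an int count of milliseconds (inherited from the .NET web service client base class), a finite limit is easy to get wrong by a factor of 1,000. If you would rather express the limit as a TimeSpan, a small helper like the one below can do the conversion. The class and method names are hypothetical, and treating null as "no timeout" is my own convention.

```csharp
using System;
using System.Threading;

// Hypothetical helper: converts a desired timeout into the int number of
// milliseconds that the web service proxy's Timeout property expects.
public static class ReportTimeouts
{
    // null means "never time out", i.e. Timeout.Infinite (-1).
    public static int ToTimeoutMilliseconds(TimeSpan? timeout)
    {
        if (timeout == null)
            return Timeout.Infinite;

        double ms = timeout.Value.TotalMilliseconds;
        if (ms <= 0)
            throw new ArgumentOutOfRangeException(nameof(timeout), "Use null for an infinite timeout.");

        // Clamp very long timeouts to the largest value an int can hold.
        return ms >= int.MaxValue ? int.MaxValue : (int)ms;
    }
}
```

Usage: `re.Timeout = ReportTimeouts.ToTimeoutMilliseconds(TimeSpan.FromMinutes(30));` sets a 1,800,000 ms limit.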

Also, when working with the web service in SSRS 2005, you have to keep the IIS timeouts in mind. For example, the session timeout defaults to 20 minutes. If you have reports that take longer than that, you would need to increase this setting in IIS. However, if your reports are taking that long, I would consider using SSIS to pre-process the report data on a schedule and then run the report against the pre-processed data. That is a much more elegant solution.

peace

Tuesday, November 4, 2008

WANTED: Your Opinion and Thoughts...

OK, so I have been a bit slack in the blog department.

I have been finding it hard to break out of the everyday rut of work to post anything.

Well, that has to change. I need to get out of this rut, to challenge myself, to expand my horizons. After all, the project I am working on is quite interesting, why not share?

So I have been thinking... Is anyone out there interested in a daily or weekly email newsletter for BI? Maybe a forum, etc.? User-donated articles, etc.? Sort of like CodeProject, except for BI? A place where I can get everyone involved, rather than just myself.

I would like to hear some feedback, as well as thoughts or ideas.

Meanwhile, I have to get back to work. I have an article on the ForEach SSIS Task almost complete. I will try to post it today.

peace

Tuesday, September 9, 2008

CERN Powers Up The Large Hadron Collider Overnight

Well... On Wednesday, at 3:30AM EDT, the Large Hadron Collider will be powered up.

I guess we will know shortly if it will unleash many tiny black holes that will consume the Earth.

Or better yet, a time machine :)

http://www.foxnews.com/story/0,2933,419404,00.html

peace

Look out for black holes...

Friday, September 5, 2008

Determining if a File Exists in SSIS Using Wildcards

In some instances I have had to deal with files that did not have a set name. In these cases, I use a handy script task to determine whether the files are there.

(Another way to handle this is to use a Foreach Loop container with a wildcard, but I am going to blog about that next week.)

First, I add a variable to my package. I usually call this variable FileExists and set it as a Boolean, with a default value of False.

I then add a script task to my control flow. In the ReadWriteVariables property, I add User::FileExists.

In the script editor, I use this script:

    Imports System
    Imports System.Data
    Imports System.Math
    Imports Microsoft.SqlServer.Dts.Runtime
    Imports System.IO

    Public Class ScriptMain

        Public Sub Main()

            ' Look in C:\ for any files matching the wildcard *.txt.
            Dim di As DirectoryInfo = New DirectoryInfo("c:\")
            Dim fi As FileInfo() = di.GetFiles("*.txt")

            ' GetFiles returns an array; its Length is the number of matching files.
            If fi.Length > 0 Then
                Dts.Variables("User::FileExists").Value = True
            Else
                Dts.Variables("User::FileExists").Value = False
            End If

            Dts.TaskResult = Dts.Results.Success

        End Sub

    End Class

This searches C:\ for files matching *.txt. GetFiles returns an array of FileInfo objects, so its Length property is the number of files found. If this is greater than zero, I set the FileExists variable to True.
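For comparison, here is the same check as a C# sketch (class and method names hypothetical). It uses Directory.EnumerateFiles, which needs .NET Framework 4 or later, so it would not run in an SSIS 2005 script task; there, GetFiles as above is the option.

```csharp
using System.IO;
using System.Linq;

public static class WildcardFileCheck
{
    // True if at least one file in 'folder' matches 'pattern' (for example "*.txt").
    // EnumerateFiles streams results, so Any() can stop at the first match
    // instead of building the full array the way GetFiles does.
    public static bool AnyFileMatches(string folder, string pattern)
    {
        return Directory.EnumerateFiles(folder, pattern).Any();
    }
}
```

One quirk applies to both methods: the .NET documentation for GetFiles notes that on Windows, a pattern whose extension is exactly three characters, such as *.xls, also matches longer extensions that begin with it, such as .xlsx. So *.txt can pick up more than plain .txt files.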

After closing the script editor, and clicking OK on the script task property window, I am ready to set up my precedence constraint.

I then add my next task to the control flow and drag the precedence constraint from the script task to the new task. I right-click on the constraint and select Edit. I select Expression in the Evaluation Operation drop-down box. For the expression I use: @[User::FileExists]==true. This way, the next task is executed only if files exist. If they don't exist, the package ends gracefully.

You could add another task to log the fact that there were no files, connect the script to that task, and set the expression to: @[User::FileExists]==false.

peace

Tuesday, September 2, 2008

How to Use the SSIS Script Component as a Data Source

Recently I had the pleasure of working with a data source that returned all of the transactions from a single stored procedure. However, that stored procedure returned 14 result sets and an output parameter.

The question was how to get all 15 outputs (the 14 result sets plus the output parameter) into SSIS. The answer: use a Script Component.

Here is the path I took to handle this:

Making the Connection

All connections in SSIS are handled through connection managers, so we need a connection manager for the source database in order to execute the stored procedure.

In the Connection Managers area of the SSIS designer, right-click and select New ADO.NET Connection…

Set up your connection properties and click Test Connection. Once you have a good connection, you are ready for the next step.

Set Up a Script Component

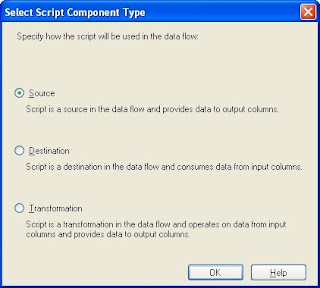

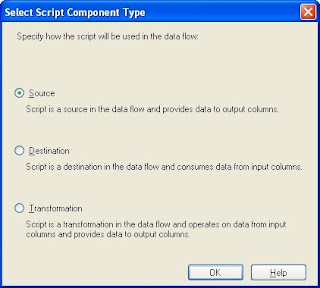

I added a script component to my data flow task. When asked if it was a Source, Transformation or a Destination, I selected Source.

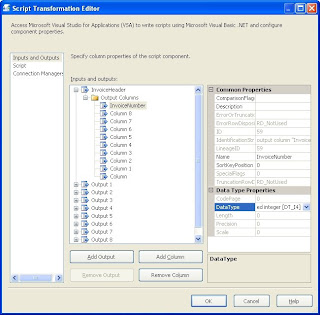

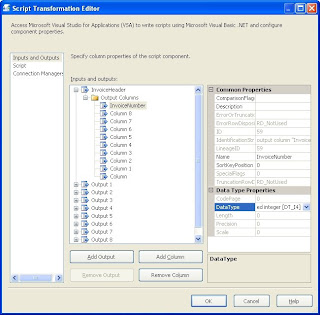

I then added a total of 15 outputs (14 for the result sets, 1 for the output parameter). To do this, I clicked on the Inputs and Outputs tab and clicked the Add Output button until I had 15 outputs.

Then came the fun part: adding, naming and typing all of the columns for all of the outputs. On the same Inputs and Outputs tab, I selected the first output, renamed it to the result set name. Then I opened up the output in the tree view, and expanded the Output Columns folder. I clicked on the Add Column button until I had as many columns as the first result set.

Once the columns were in the tree view, I selected the first one, changed the name, set the data type and size, and moved on to the next column, until they were all complete.

Then I did the same for each output in the component.

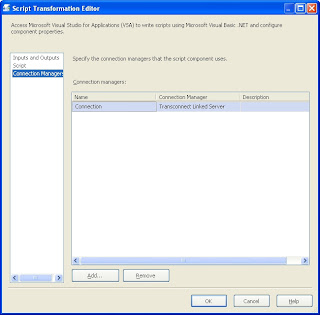

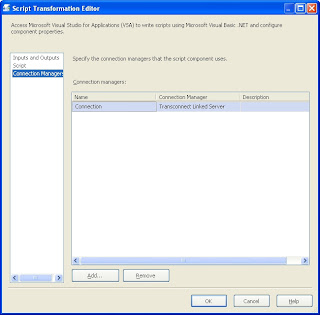

The final step here is to configure the script component to use your newly created connection manager. To do this, click on the Connection Managers page and add a new connection. Set the name, and then in the middle column, choose your connection manager. The script refers to the connection by this name; in my code it is called Connection.

Scripting the Outputs

The next step is to tie together the stored procedure and the script component outputs. To do this, click on the script tab and click the Design Script button to open the scripting window.

I overrode two subroutines to handle opening the connection and executing the stored procedure:

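    Imports System
    Imports System.Data
    Imports System.Data.SqlClient
    Imports Microsoft.SqlServer.Dts.Pipeline.Wrapper
    Imports Microsoft.SqlServer.Dts.Runtime.Wrapper

    Public Class ScriptMain
        Inherits UserComponent

        Private connMgr As IDTSConnectionManager90
        Private Conn As SqlConnection
        Private Cmd As SqlCommand
        Private sqlReader As SqlDataReader

        Public Overrides Sub AcquireConnections(ByVal Transaction As Object)
            ' "Connection" is the name given to the connection on the Connection Managers page.
            connMgr = Me.Connections.Connection
            ' The ADO.NET connection manager hands back an open SqlConnection.
            Conn = CType(connMgr.AcquireConnection(Nothing), SqlConnection)
        End Sub

        Public Overrides Sub PreExecute()
            MyBase.PreExecute()
            ' Run the proc and return the output parameter as a final one-row result set.
            Cmd = New SqlCommand("Declare @SessionID int; Exec spgTransactions @SessionID OUTPUT; Select @SessionID", Conn)
            sqlReader = Cmd.ExecuteReader()
        End Sub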

The AcquireConnections subroutine is called by SSIS when it is ready to open the database connections. I override it to make sure the database connection is ready to use.

Likewise, PreExecute is called when it's time to get the data. (This should clear up some of the long-running PreExecute issues out there.) Here I execute the source stored procedure and open the SqlDataReader.

Now comes the fun part. Linking the result sets to the output columns.

This is done in the CreateNewOutputRows subroutine:

        Public Overrides Sub CreateNewOutputRows()

            'Invoice Header
            Do While sqlReader.Read()
                With Me.InvoiceHeaderBuffer
                    .AddRow()
                    .InvoiceNumber = sqlReader.GetInt32(0)
                    .InvoiceDate = sqlReader.GetDateTime(1)
                    'etc, etc, etc for all columns.
                End With
            Loop

            sqlReader.NextResult()

            'Invoice Detail
            Do While sqlReader.Read()
                With Me.InvoiceDetailBuffer
                    .AddRow()
                    ...
                End With
            Loop

            'more outputs and more columns
            ' until we get to the last result set, which will be the output parameter (SessionID)

            sqlReader.NextResult()

            'Session ID
            'We know this result set has only 1 row
            sqlReader.Read()
            With Me.SessionIDBuffer
                .AddRow()
                .SessionID = sqlReader.GetInt32(0)
            End With

            sqlReader.Read() 'Clear the read queue

        End Sub

This code goes through each result set in the SqlDataReader and assigns each value to the matching output column. I did not show all of the columns or all of the outputs, since it's the same concept for each.
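If you want to try the Read/NextResult loop without SQL Server or SSIS, the sketch below (C#, with hypothetical table and column names) feeds it from a DataSet instead: DataSet.CreateDataReader() returns a DataTableReader that exposes each table as a consecutive result set, much like SqlDataReader exposes the result sets of the stored procedure. Lists stand in for the output buffers.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

public static class MultiResultSetDemo
{
    // Two result sets: invoice headers, then the output parameter as a one-row SELECT.
    public static DataSet BuildSampleData()
    {
        var ds = new DataSet();

        DataTable header = ds.Tables.Add("InvoiceHeader");
        header.Columns.Add("InvoiceNumber", typeof(int));
        header.Columns.Add("InvoiceDate", typeof(DateTime));
        header.Rows.Add(1001, new DateTime(2008, 9, 1));
        header.Rows.Add(1002, new DateTime(2008, 9, 2));

        DataTable session = ds.Tables.Add("SessionID");
        session.Columns.Add("SessionID", typeof(int));
        session.Rows.Add(42);

        return ds;
    }

    // Routes every row of the first result set into a list (the stand-in for an
    // output buffer), then moves to the next result set and reads its single row.
    public static (List<(int Number, DateTime Date)> Headers, int SessionId) ReadAll(IDataReader reader)
    {
        var headers = new List<(int Number, DateTime Date)>();
        while (reader.Read())
        {
            headers.Add((reader.GetInt32(0), reader.GetDateTime(1)));
        }

        if (!reader.NextResult() || !reader.Read())
            throw new InvalidOperationException("Expected a one-row SessionID result set.");

        int sessionId = reader.GetInt32(0);
        return (headers, sessionId);
    }
}
```

Because ReadAll only depends on IDataReader, the same loop works unchanged against a SqlDataReader.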

Once that is done, I clean up after myself:

        Public Overrides Sub PostExecute()
            sqlReader.Close()
            MyBase.PostExecute()
        End Sub

        Public Overrides Sub ReleaseConnections()
            connMgr.ReleaseConnection(Conn)
        End Sub

    End Class

This closes the SQL Reader and releases the connection to the database.

Once this is done, close the script window and click OK in the Script Component properties.

The script component will now have multiple outputs that you can select from when linking it to another data flow component.

Conclusion

I hope this will help you when you need to return multiple result sets from stored procedures into SSIS. If you are familiar with VB.NET coding, you should pick this up easily, and even if not, the example at least gives you the basic steps and something to copy from.

peace

CodeProject

Friday, August 29, 2008

Thursday, August 28, 2008

SSIS 2008 Sets Record

First of all, hello from the depths of an enterprise ETL project!

I haven't been working on reporting at all in the last few months; I've been dedicated to ETL and optimization.

Second, I realize that this article is from February, but I thought it was a really good read.

http://blogs.msdn.com/sqlperf/archive/2008/02/27/etl-world-record.aspx

peace

Wednesday, May 28, 2008

Removing Recent Projects in .NET

I recently needed to clean up my "recent projects" list in BIDS.

Since you cannot do this from the Visual Studio UI, you will have to resort to messing with the registry.

*** Serious problems might occur if you modify the registry incorrectly!!! ***

*** Modify the registry at your own risk!!! ***

That being said... Open up RegEdt32 using the Run command from the start menu.

Navigate to:

HKEY_CURRENT_USER\Software\Microsoft\VisualStudio\8.0\ProjectMRUList

(For Visual Studio .NET 2003, use 7.1 instead of 8.0; for Visual Studio .NET 2002, use 7.0.)

Then, from the right hand window, select the values that you do not want, and press delete. The value names are File1, File2, ...

Be aware that you will need to renumber the remaining values so they run in order starting from File1.

If they are not in exact sequential order, they will not load on the Start Page. This is not documented in the Microsoft KB article (http://support.microsoft.com/kb/919486).
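The renumbering rule is easy to get wrong by hand once you pass File9, because a plain text sort puts File10 before File2. Here is a minimal C# sketch of the rule itself, with a hypothetical class name: it takes the values that are left and returns them as File1, File2, ... in their original order. Writing them back to the registry (deleting the old values and creating the new ones, for example with the Microsoft.Win32.RegistryKey class) is not shown, and the registry warning above still applies.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: given the FileN values left after deleting entries
// (value name -> project path), returns them renumbered File1..FileN.
public static class MruRenumber
{
    public static List<KeyValuePair<string, string>> Renumber(IDictionary<string, string> remaining)
    {
        return remaining
            // Keep only names of the form File<number>.
            .Where(kv => kv.Key.StartsWith("File", StringComparison.OrdinalIgnoreCase)
                         && int.TryParse(kv.Key.Substring(4), out _))
            // Sort numerically, so File10 comes after File9 rather than after File1.
            .OrderBy(kv => int.Parse(kv.Key.Substring(4)))
            // Assign new, gap-free names in that order.
            .Select((kv, index) => new KeyValuePair<string, string>("File" + (index + 1), kv.Value))
            .ToList();
    }
}
```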

peace

Monday, March 31, 2008

View of 1999...From the 1960's

Here is another interesting view of the future from the mid-1960s... again, they seem to have gotten the concepts right, but not the implementation...

And they also didn't see the social norms changing. The wife does the shopping while the husband grimaces and pays for it!

From:

View of 1999 from the '60s

peace

BobP

New Small SSIS Features in 2008

I have posted feature requests on Microsoft Connect and have had about a 75% success rate.

Among my requests:

Hide a column in a matrix

Problems with autohide tool boxes

Here is a good write up on 2 new features for SSIS that Jamie Thomson requested via Connect and they will be in SSIS 2008:

http://blogs.conchango.com/jamiethomson/archive/2008/03/20/ssis-some-new-small-features-for-katmai.aspx

peace

BobP

Thursday, March 27, 2008

A view of 2008... from 1968

This is an interesting article from "Modern Mechanix" magazine from 1968.

Click here for article

I find it very interesting that missing one small piece of information skews the entire article. For example, he talks about "Not every family has its private computer. Many families reserve time on a city or regional computer to serve their needs." and "TV-telephone shopping is common." He did not see a computer in every home that is connected to every other computer via the internet.

But apart from that, it is a good article, and many of the concepts are a reality today, although not in the same form as he anticipated.

peace

Bob

Friday, January 11, 2008

ReportingService2005 Web Service

Well, I know it’s been a while, but I am finally back after the holidays!

The next phase of our reporting project is about to take off, and I had a little down time while waiting on requirements.

So, using the ReportingService2005 web service, I created a report deployment application in C#.

It allows me to select the directory of my RDL/RDS files, and then select my report server and any folder on that server.

Then it deploys the selected reports to the report server with one button click.

I can then change the report server and deploy the same reports to that server. This is helpful if you have multiple systems that should stay in sync. Because the web service reference uses a dynamic URL, I can change the target server in code.

Working with the web service was rather straightforward, and allowed me to do everything I needed to do as far as deploying reports and data sources.

However, what I wanted to write about was a catch that had me stumped for a few minutes.

When you publish a report with the CreateReport method of the web service, its data sources are not linked to the shared data sources on the server. So I used the GetItemDataSources method to get the report's data sources, built a new data source in code that references the server's shared data source, and then used the SetItemDataSources method to update the report.

    DataSource[] dataSources = rs.GetItemDataSources(TargetFolder + "/" + ReportName);

    foreach (DataSource ds in dataSources)
    {
        // A report data source that is not linked to a shared data source
        // comes back as an InvalidDataSourceReference.
        if (ds.Item is InvalidDataSourceReference)
        {
            string dsName = ds.Name;

            // Assumes the shared data sources live in the /Data Sources folder
            // under the same name as the report's data source.
            DataSourceReference serverDSRef = new DataSourceReference();
            serverDSRef.Reference = "/Data Sources/" + dsName;

            DataSource serverDS = new DataSource();
            serverDS.Item = serverDSRef;
            serverDS.Name = dsName;

            rs.SetItemDataSources(TargetFolder + "/" + ReportName, new DataSource[] { serverDS });
        }
    }

This nicely updated the report data sources to use the server shared data sources.
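One detail to watch in the code above is the path building: TargetFolder + "/" + ReportName gives "//Monthly Summary" when the target is the root folder "/". A small helper like the one below (C#, hypothetical names) trims the trailing slash first. Naming each report after its .rdl file is an assumption about how a deployment utility like this picks names, not something the post states.

```csharp
using System;
using System.IO;

public static class ReportPaths
{
    // Builds a report server item path such as "/Sales/Monthly Summary",
    // trimming any trailing slash so the root folder "/" does not produce "//".
    public static string ItemPath(string folder, string itemName)
    {
        if (string.IsNullOrEmpty(folder))
            throw new ArgumentException("Folder is required.", nameof(folder));
        return folder.TrimEnd('/') + "/" + itemName;
    }

    // Assumed convention: the published report name is the .rdl file name without its extension.
    public static string ReportNameFromFile(string rdlPath)
    {
        return Path.GetFileNameWithoutExtension(rdlPath);
    }
}
```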

All in all, the 1½ days I spent writing this app were well worth it. I learned about the deployment methods of the web service, and kept my coding skills up, plus I have a nice deployment utility for reports!

peace
